[1]:

%load_ext autoreload

%autoreload 2

[2]:

from IPython.display import HTML, display, Image

import warnings

warnings.filterwarnings('ignore')

Advanced evaluation

In this tutorial we evaluate probesets in finer detail: besides the summary values for each metric (see basic evaluation tutorial) we can get e.g. per gene and per cell type information of each evaluation.

Import packages and setup

[3]:

import pandas as pd

import scanpy as sc

import spapros as sp

import matplotlib.pyplot as plt

[4]:

sc.settings.verbosity = 1

sc.logging.print_header()

print(f"spapros=={sp.__version__}")

scanpy==1.10.0 anndata==0.10.6 umap==0.5.5 numpy==1.26.4 scipy==1.12.0 pandas==1.5.3 scikit-learn==1.4.1.post1 statsmodels==0.14.1 igraph==0.11.4 pynndescent==0.5.11

spapros==0.1.5

Load dataset

[5]:

adata = sc.datasets.pbmc3k()

adata_tmp = sc.datasets.pbmc3k_processed()

# Get infos from the processed dataset

adata = adata[adata_tmp.obs_names, adata_tmp.var_names]

adata.obs['celltype'] = adata_tmp.obs['louvain']

adata.obsm['X_umap'] = adata_tmp.obsm['X_umap']

del adata_tmp

# Preprocess counts and get highly variable genes

sc.pp.normalize_total(adata)

sc.pp.log1p(adata)

sc.pp.highly_variable_genes(adata, flavor="cell_ranger", n_top_genes=1000)

adata

[5]:

AnnData object with n_obs × n_vars = 2638 × 1838

obs: 'celltype'

var: 'gene_ids', 'highly_variable', 'means', 'dispersions', 'dispersions_norm'

uns: 'log1p', 'hvg'

obsm: 'X_umap'

2. Set up the ProbesetEvaluator

[6]:

# initialize a ProbesetEvaluator

evaluator = sp.ev.ProbesetEvaluator(

adata,

scheme="full",

marker_list="../../data/pbmc3k_marker_list.csv",

verbosity=2,

results_dir=None

)

# The pbmc3k_marker_list.csv includes the following data:

# pd.DataFrame(

# data={

# "CD4 T cells" :["IL7R", None],

# "CD14+ Monocytes" :["CD14", "LYZ"],

# "B cells" :["MS4A1", None],

# "CD8 T cells" :["CD8A", None],

# "NK cells" :["GNLY", "NKG7"],

# "FCGR3A+ Monocytes" :["FCGR3A", "MS4A7"],

# "Dendritic Cells" :["FCER1A", "CST3"],

# "Megakaryocytes" :["NAPA-AS1", "PPBP"],

# },

# ).to_csv("../../data/pbmc3k_marker_list.csv", index=False)

3. Run evaluation methods

[7]:

# select reference probesets with basic selection methods

selections = sp.se.select_reference_probesets(adata, n=20)

# Take Spapros genes from basic selection tutorial

genes = [

'PF4', 'HLA-DPB1', 'FCGR3A', 'GZMB', 'CCL5', 'S100A8', 'IL32', 'HLA-DQA1', 'NKG7', 'AIF1', 'CD79A', 'LTB', 'TYROBP',

'HLA-DMA', 'GZMK', 'HLA-DRB1', 'FCN1', 'S100A11', 'GNLY', 'GZMH'

]

# we add it to the dictionary of probesets

selections["spapros"] = pd.DataFrame({"selection": adata.var_names.isin(genes)}, index=adata.var_names)

[8]:

# now start the evaluation for each of the collected probesets

for probeset_name, probeset_df in selections.items():

gene_list = probeset_df.index[probeset_df["selection"]].to_list()

evaluator.evaluate_probeset(gene_list, set_id=probeset_name)

OMP: Info #276: omp_set_nested routine deprecated, please use omp_set_max_active_levels instead.

The following cell types are not included in forest classifications since they have fewer than 40 cells: ['Dendritic cells', 'Megakaryocytes']

The following cell types are not included in forest classifications since they have fewer than 40 cells: ['Dendritic cells', 'Megakaryocytes']

The following cell types are not included in forest classifications since they have fewer than 40 cells: ['Dendritic cells', 'Megakaryocytes']

The following cell types are not included in forest classifications since they have fewer than 40 cells: ['Dendritic cells', 'Megakaryocytes']

The following cell types are not included in forest classifications since they have fewer than 40 cells: ['Dendritic cells', 'Megakaryocytes']

There are maximally four steps to calculate for each metric: 1. shared computations: this step is only needed once for all probe sets (e.g. clusterings on the reference data that includes all genes) 2. pre computations: this step is run for each probe set, but it’s independent of the shared results of step 1 3. main computations: this step is run for each probe set and depends on the shared results of step 1 (if there was a step 1 for a given metric) and potentially the pre results of step 2 4. summary computations: extract the final metric value as a summary statistic

In the above progress bars you can see that the shared computations take longer for the first probe set. For the following probe sets, the shared computations are reused.

Not all metrics include all 4 steps. E.g. forest classification simply has a main computation step, as it doesn’t require any shared computations.

[9]:

evaluator.summary_results

[9]:

| cluster_similarity nmi_5_20 | cluster_similarity nmi_21_60 | knn_overlap mean_overlap_AUC | forest_clfs accuracy | forest_clfs perct acc > 0.8 | gene_corr 1 - mean | gene_corr perct max < 0.8 | marker_corr per marker | marker_corr per celltype | marker_corr per marker mean > 0.025 | |

|---|---|---|---|---|---|---|---|---|---|---|

| PCA | 0.683315 | 0.533974 | 0.293958 | 0.887955 | 0.897735 | 0.820372 | 1.000000 | 0.454319 | 0.555655 | 0.573841 |

| spapros | 0.721423 | 0.553337 | 0.167580 | 0.923666 | 0.990460 | 0.772479 | 0.993647 | 0.703430 | 0.902092 | 0.902092 |

| HVG | 0.495991 | 0.408694 | 0.056358 | 0.819195 | 0.720651 | 0.840755 | 0.900000 | 0.591106 | 0.766929 | 0.753666 |

| random (seed=0) | 0.243881 | 0.255338 | 0.023879 | 0.575532 | 0.007778 | 0.976067 | 1.000000 | 0.229733 | 0.290810 | 0.290023 |

| DE | 0.712277 | 0.545842 | 0.160027 | 0.916620 | 0.955688 | 0.757868 | 0.792178 | 0.672017 | 0.863489 | 0.863489 |

Explanations of the metrics:

Variation recovery metrics: - cluster_similarity nmi_5_20: Assess the similarity of clustering the dataset on all genes vs just the selected gene set. Clusterings are calculated with different leiden resolutions to genertate clusterings of n = 5 to 20 clusters. The NMI is calculated between the clusterings of the full dataset and the gene set. Finally the average NMI over the different n’s is calculated. This metric measures how well the clustering structure of the dataset is recovered with a gene subset. As n is chosen to be between 5 and 20 this metric assess clustering structure on a coarse level. - cluster_similarity nmi_21_60: Same as above but with n = 21 to 60 clusters. This metric assesses clustering structure on a finer level. - knn_overlap mean_overlap_AUC: Similar to cluster_similarity this metric assesses how well the dataset’s structure is recovered with a gene subset. It looks at even finer variation recovery than cluster_similarity nmi_21_60 as it assesses the overlap of each cell’s k nearest neighbors of the full dataset knn graph and the gene set knn graph.

Cell type classification metrics: - forest_clfs accuracy: This metric assesses how well a random forest classifier can predict cell types based on the gene set. The metric is the mean accuracy of the classifier over all cell types. - forest_clfs perct acc > 0.8: This metric assesses how many cell types can be predicted with an accuracy of at least 0.8. More specifically the transformation function is not a sharp step function at 0.8 but instead at step function with a linear increase from 0 to 1 between 0.75 and 0.85 (i.e. smoothed thresholding). The metric gives an idea about how many cell types can be identified with high confidence with the given gene set.

Gene redundancy metrics: - gene_corr 1 - mean: Mean over maximum correlations of each gene with all other genes. This metric assesses how redundant the gene set is. Note that the actual value is 1 - the mean correlations which means the higher the value the less redundant the gene set is. - gene_corr perct max < 0.8: Percentage of genes that have a maximum correlation of less than 0.8 with all other genes. This metric gives an idea about how many genes show unique expression profiles in the gene set. The reasoning behind introducing this metric with the 0.8 threshold is that genes that are only “somewhat correlated” (e.g. r=0.5) could still encode for different information and thus be useful in a gene set, while genes that are highly correlated (e.g. r=0.9) are likely redundant. Note however, that redundancy is not necessarily bad, as it can be used to increase robustness of the gene set.

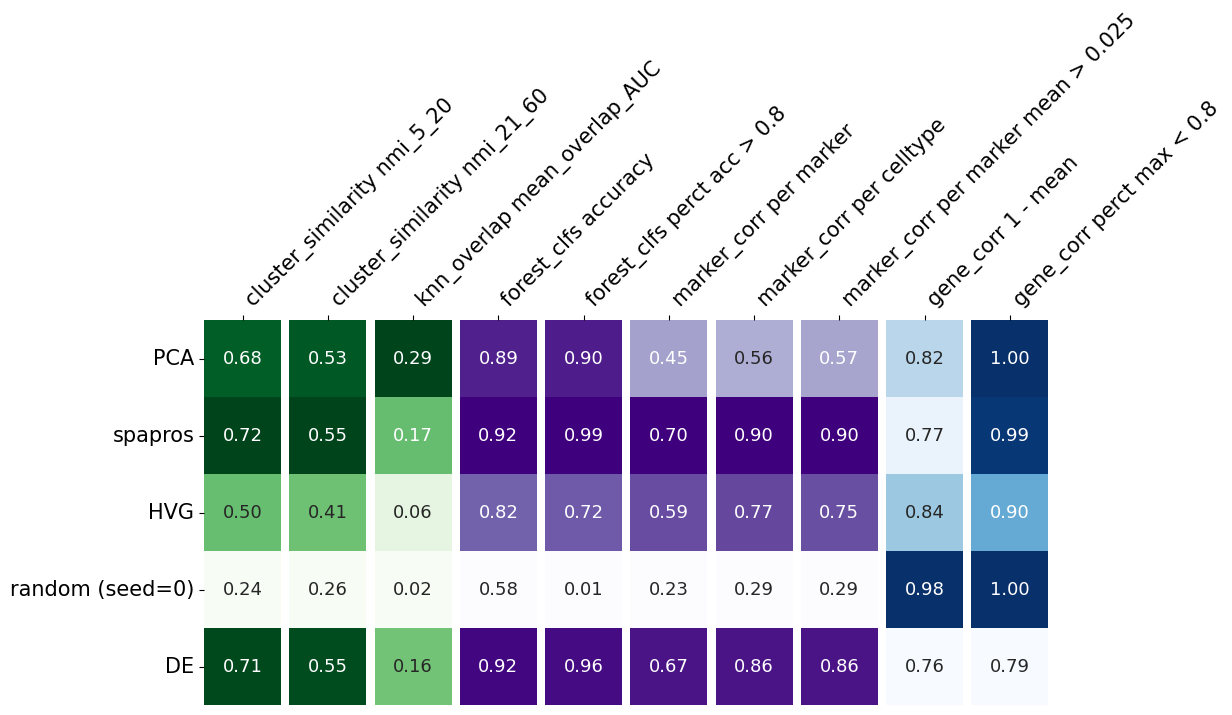

4. Visualize the evaluation results

[10]:

#evaluator.summary_statistics(set_ids=selections.keys())

evaluator.plot_summary()

I this plot, each row summarizes the evaluation metrics of a given gene set. The metrics evaluating the variability recovery are shown in the green columns (1-3). The clustering similarity (coarse level clustering: 5-20, fine level clustering: 21-60) is evaluated by the normalized mutual information (NMI) of the clustering of the selected probeset and the clustering on the full gene set. High scores means that the cells build similar cluster on the gene subset as on the full gene set.

Both NMI values are highest for the pca based selection, which indicates that this method recovers the variability best in this comparison.

Even more fine grained variation recovery is measured with the third column (knn_overlap). It measures the neighbors overlap of the knn graphs of the full gene set and the respective probeset.

The subsequent purple columns (4-8) indicate the performance of each selection method with respect to cell type identification. Column 4 contains the mean classification accuracy of a random forest prediction over cell types. This quantifies the overall classification success while column 5 provides an estimate for the percentage of reliably captured cell types, i.e. the average of a smoothed thresholding of classification performance around 0.8 for each cell type. The other 3 purple columns (6-8) derive from the comparison of the correlation within the selected probeset with the correlation within the marker list.

The last two, blue columns (9, 10) evaluate the correlation within a selected probeset. If genes with highly correlated expression are selected, the information content could also be provided by just one of them.

Evaluations beyond summary metrics

The summary metrics are useful to get an overview of the performance of the gene sets. However, to understand the performance in more detail, we can look into more specific evaluation scores that were calculated on the run and still accessible in the ProbesetEvaluator object.

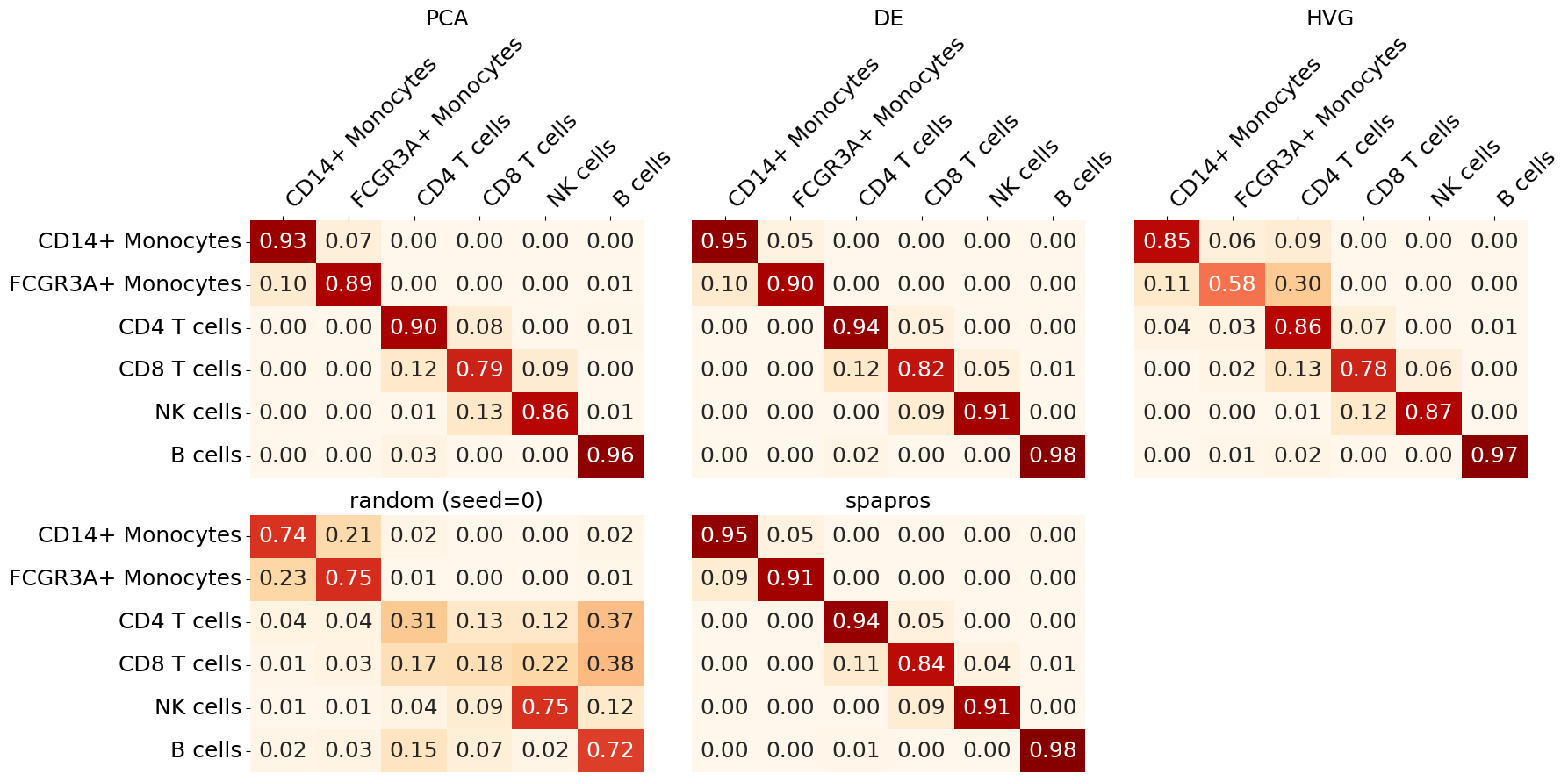

cell type identification

To see and compare how well each cell type can be classified with different gene sets we plot the cell type classification confusion matrices. The confusion matrix shows how many cells of a given cell type were classified as another cell type. The diagonal of the matrix shows the correct classification rate. The better the classification the more the diagonal is filled with high values.

[11]:

evaluator.plot_confusion_matrix()

We can oberserve that CD8 T cells are the most difficult to classify across gene sets. The highest classification accuracy for all cell types in this example is achieved with the Spapros selection.

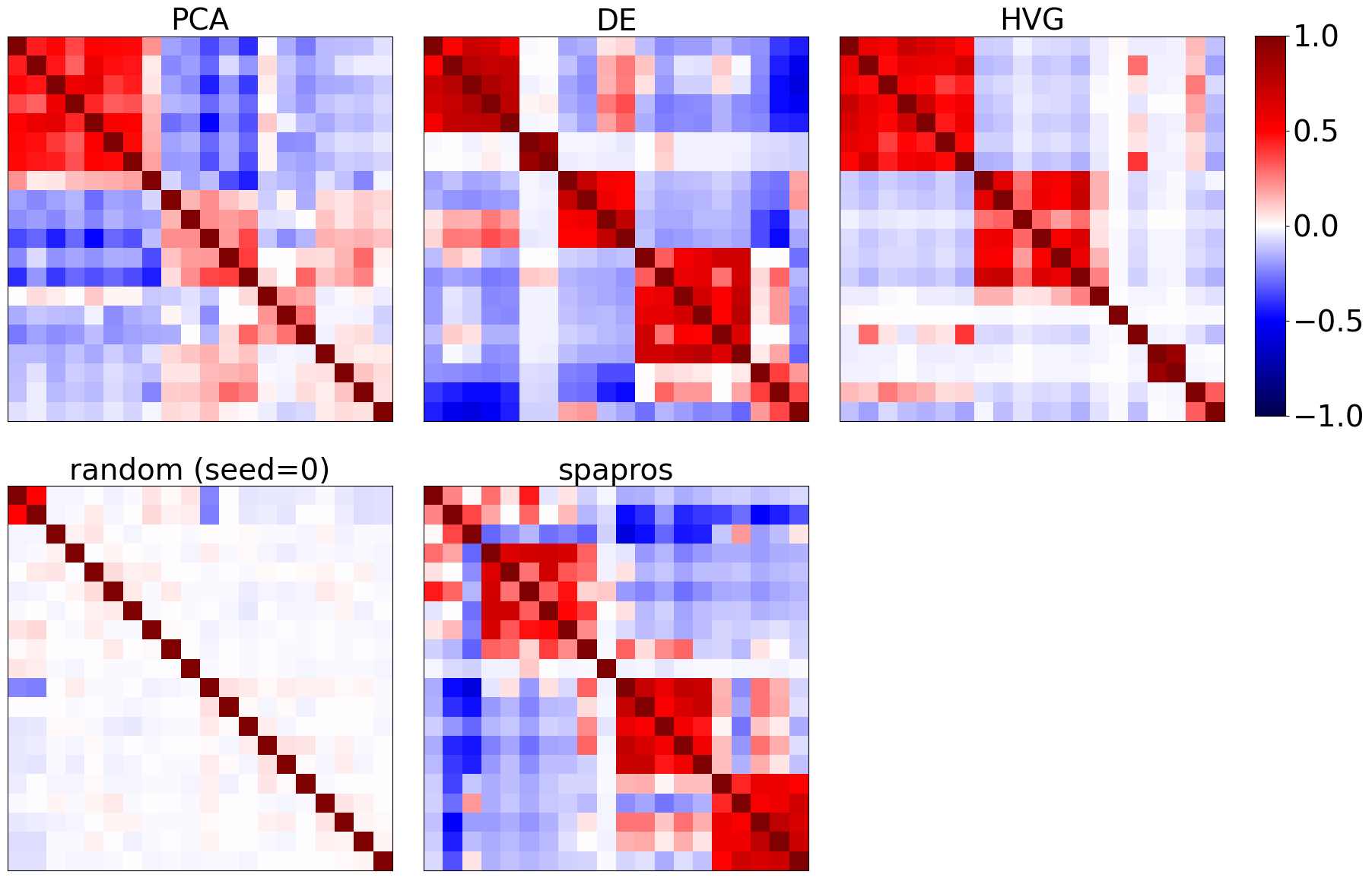

Gene redundancy

We can look into the coexpression patterns of genes by looking at the correlation matrix of the selected genes. Ideally we find a few correlative modules. If there is a correlative module that’s very large compared to the others, then a high capacity of the gene set is wasted on redundant information.

[13]:

evaluator.plot_coexpression()

clustering similarity

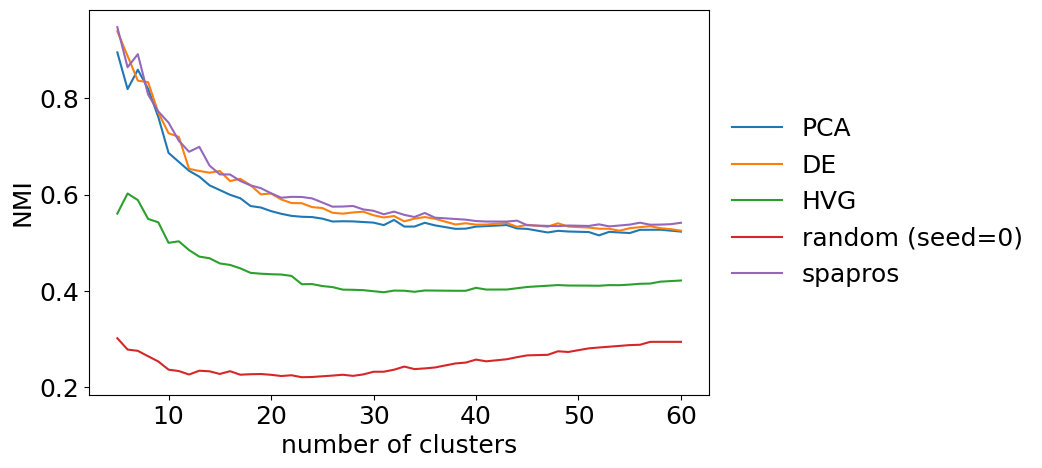

For the clustering similarity we can look at the similarity (nmi) between the gene set and the full gene set for different total numbers of cluster. This can give us an idea if a gene set is for example more capable to recover coarse clustering structure than fine clustering structure. For larger datasets such behavior could be expected e.g. for DE selections (coarse clustering focused) and PCA based selections (fine clustering focused). Note that this fine level analysis is mainly useful when comparing gene sets.

[14]:

evaluator.plot_cluster_similarity()

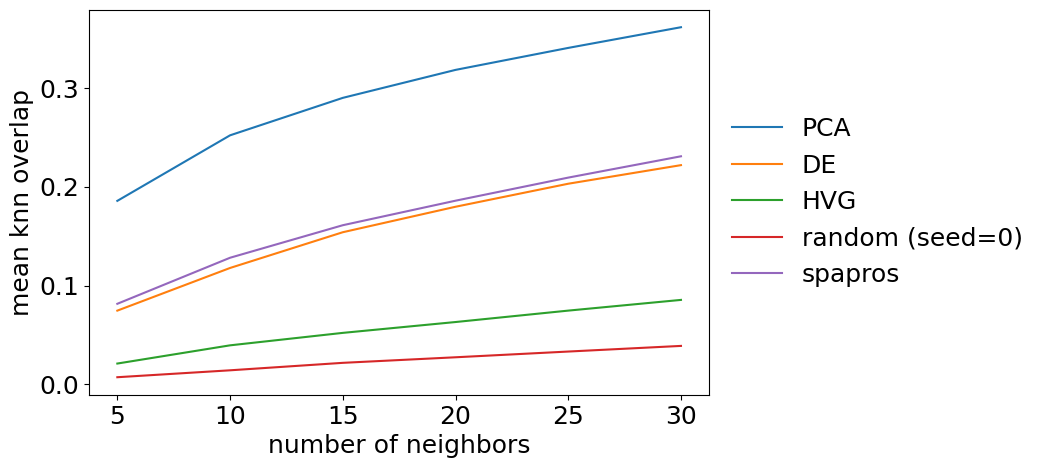

neighborhood similarity

The detailed evaluation on neighborhood similarity is more of a sanity check than providing additional information. We can plot the overlap for different k’s of k nearest neighbors graphs. The summary metric is the mean over all k. The metric should show a very stable increase with k for each gene set.

[15]:

evaluator.plot_knn_overlap()